Accelerating Pandas Operations with NVIDIA cuDF: A Performance Comparison

- Ryan Mardani

- Oct 23, 2024

- 3 min read

As data volumes grow exponentially, the need for faster data processing becomes critical. Traditional CPU-based data manipulation libraries like pandas can become bottlenecks when dealing with large datasets. Enter NVIDIA cuDF, a GPU-accelerated library that offers a pandas-like API, harnessing the power of NVIDIA GPUs to significantly speed up data operations.

In this blog post, we explore how cuDF can accelerate common pandas operations. We'll compare performance results from NVIDIA's tests with our own, conducted on different hardware configurations and datasets.

Introduction to cuDF

cuDF is part of the RAPIDS suite of libraries, which is designed to accelerate data science pipelines on GPUs. It provides a pandas-like interface, so data scientists can use GPU acceleration without a steep learning curve.

Key Features of cuDF:

High Performance: Accelerated data manipulation using NVIDIA GPUs.

Familiar API: pandas-like syntax for seamless transition.

Integration: Works with other RAPIDS libraries for end-to-end GPU-accelerated workflows.
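As a rough illustration of the pandas-like API, the sketch below runs the same cleaning and aggregation steps on whichever backend is installed. The column names `well` and `rate` and both helper functions are hypothetical and do not come from the tests in this post; the DataFrame calls themselves (`dropna`, `drop_duplicates`, `groupby(...).sum()`, and cuDF's `to_pandas`) exist in the libraries as used here.

```python
import importlib


def load_backend():
    """Return the cudf module if it can be imported, otherwise pandas."""
    for name in ("cudf", "pandas"):
        try:
            return importlib.import_module(name)
        except ImportError:
            continue
    raise ImportError("install cudf or pandas to run this sketch")


def total_rate_per_well(xdf, records):
    """Drop missing and duplicate rows, then sum `rate` per `well`.

    `xdf` is either the cudf or the pandas module; the calls below are
    spelled the same way in both libraries. The column names are
    hypothetical placeholders.
    """
    df = xdf.DataFrame(records)
    df = df.dropna().drop_duplicates()
    totals = df.groupby("well")["rate"].sum()
    # cuDF results live in GPU memory; copy them back to the host first.
    if hasattr(totals, "to_pandas"):
        totals = totals.to_pandas()
    return totals.to_dict()
```

Calling `total_rate_per_well(load_backend(), records)` with a dictionary of equal-length column lists runs the identical code path on the GPU when cuDF is present and on the CPU otherwise, which is the property the "Familiar API" point describes.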

NVIDIA's Performance Tests

NVIDIA conducted performance tests using cuDF and pandas on large datasets to showcase the acceleration achieved with GPU computing.

Test Machine Specifications:

GPU: NVIDIA RTX A6000

CPU: Intel(R) Xeon(R) Silver 4110 CPU @ 2.10GHz

RAPIDS Version: 23.02 with CUDA 11.5

Dataset Size: Approximately 50 million to over 100 million rows with fewer than 10 columns.

Performance Results:

Test 1: Dataset with ~50 Million Rows

| Function | GPU Time (s) | CPU Time (s) | GPU Speedup |
| --- | --- | --- | --- |
| read | 1.977145 | 69.033193 | 34.92x |
| slice | 0.030406 | 13.349222 | 439.03x |
| na | 0.076090 | 8.246114 | 108.37x |
| dropna | 0.242239 | 9.784584 | 40.39x |
| unique | 0.013432 | 0.445705 | 33.18x |
| dropduplicate | 0.233920 | 0.518868 | 2.22x |
| group_sum | 0.672500 | 7.850392 | 11.67x |

Test 2: Dataset with >100 Million Rows

| Function | GPU Time (s) | CPU Time (s) | GPU Speedup |
| --- | --- | --- | --- |
| read | 4.391139 | 117.607004 | 26.78x |
| drop | 0.184182 | 3.340470 | 18.14x |
| diff | 0.131384 | 16.044269 | 122.12x |
| select | 0.071510 | 62.890464 | 879.46x |
| resample | 0.347972 | 9.892627 | 28.43x |

Insights:

Massive Speedups: Operations like slice and select showed speedups of over 400x and 800x, respectively.

I/O Operations: Reading large datasets saw significant improvements, with speedups of up to 34.92x.

Consistency Across Operations: Every function tested ran faster on the GPU, although the gain varied widely, from 2.22x for dropduplicate to 879.46x for select.
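The GPU Speedup column in the tables above is consistent with dividing CPU time by GPU time. A minimal sketch of that arithmetic, using three rows copied from the tables (the function name `gpu_speedup` is ours, chosen for illustration):

```python
def gpu_speedup(cpu_seconds, gpu_seconds):
    """Return how many times faster the GPU run was (CPU time / GPU time)."""
    if gpu_seconds <= 0:
        raise ValueError("gpu_seconds must be positive")
    return cpu_seconds / gpu_seconds


# (GPU seconds, CPU seconds) copied from the Test 1 and Test 2 tables.
timings = {
    "read (~50M rows)": (1.977145, 69.033193),
    "slice (~50M rows)": (0.030406, 13.349222),
    "select (>100M rows)": (0.071510, 62.890464),
}

for name, (gpu_s, cpu_s) in timings.items():
    print(f"{name}: {gpu_speedup(cpu_s, gpu_s):.2f}x")
```

A value above 1 means the GPU was faster; a value below 1 means the CPU was faster.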

Our Performance Tests

To validate NVIDIA's findings and explore how cuDF performs on different hardware and datasets, we conducted our own tests.

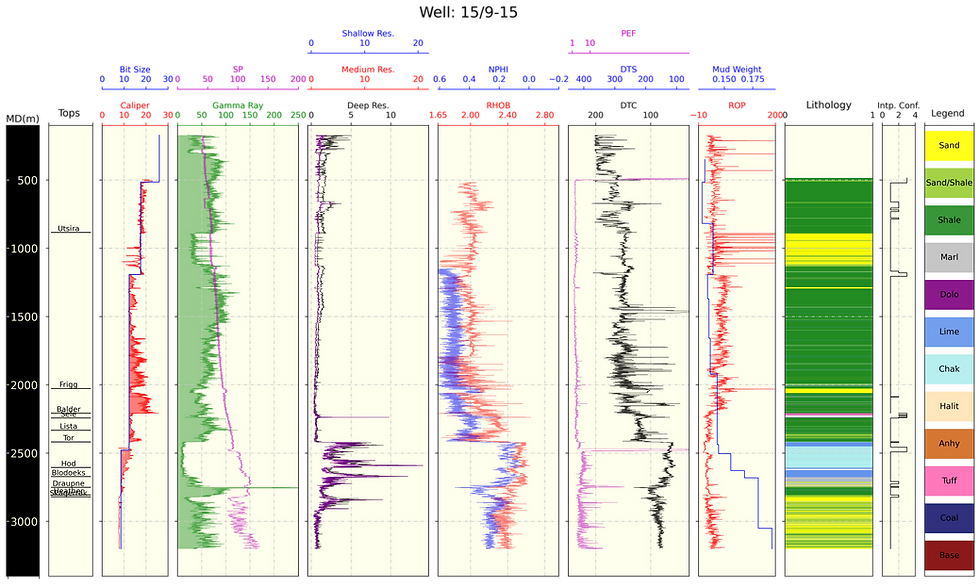

Image Credit: NVIDIA

Our Machine Specifications (Using WSL2):

GPU: NVIDIA GeForce RTX 3080 Ti

CPU: Intel(R) Core(TM) i7-10700 CPU @ 2.90GHz

RAPIDS Version: 24.12 with CUDA 12.7

Dataset: Oil and gas dataset with approximately 5 million rows and fewer than 10 columns.

Performance Results:

| Function | GPU Time (s) | CPU Time (s) | GPU Speedup |
| --- | --- | --- | --- |
| select | 0.021315 | 0.645474 | 30.28x |
| slice | 0.079916 | 0.723981 | 9.06x |
| dropna | 0.071798 | 0.266679 | 3.71x |
| diff | 0.222172 | 0.799267 | 3.60x |
| unique | 0.022457 | 0.020739 | 0.92x |
| dropduplicate | 0.035064 | 0.032213 | 0.92x |
| drop | 0.591526 | 0.524621 | 0.89x |
| read | 14.689647 | 11.922209 | 0.81x |
| resample | 11.344053 | 1.237873 | 0.11x |

Observations:

Significant Speedups: Operations like select and slice showed speedups of 30x and 9x, respectively.

Mixed Results: unique, dropduplicate, drop, read, and resample showed no speedup. All five ran slower on the GPU, from roughly 0.9x for unique and dropduplicate down to 0.11x for resample, which took about nine times longer than on the CPU.

Dataset Size Impact: The smaller dataset size (5 million rows) compared to NVIDIA's tests may have influenced the performance gains.
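The benchmark code itself is not reproduced in this post. As a sketch of how timings like these might be collected, assuming a best-of-N wall-clock measurement with an untimed warm-up call, a small harness could look like this (`best_time` and its parameters are hypothetical names):

```python
import time


def best_time(func, repeats=5, warmup=1):
    """Return the fastest wall-clock time, in seconds, over `repeats` calls.

    The warm-up calls run first and are not timed, so one-off costs such
    as GPU context creation or cache population stay out of the result.
    """
    for _ in range(warmup):
        func()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        timings.append(time.perf_counter() - start)
    return min(timings)
```

With a pandas DataFrame `pdf` and a cuDF copy `gdf` of the same data (both hypothetical here), `best_time(lambda: pdf.dropna())` and `best_time(lambda: gdf.dropna())` would give the two numbers for one table row. Whether the warm-up is excluded matters most for fast operations, where a one-off setup cost can exceed the operation itself.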

Analysis and Insights

Factors Affecting Performance

Dataset Size:

Larger Datasets Benefit More: GPU acceleration shines with larger datasets because fixed costs, such as transferring data between CPU and GPU memory, are spread over more rows.

Smaller Datasets Overhead: For smaller datasets, the overhead can outweigh the performance gains.
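One way to picture this trade-off is a deliberately simple linear model: the CPU pays a cost per row, while the GPU pays a smaller cost per row plus a fixed overhead. The sketch below uses made-up cost figures purely for illustration; they are not measurements from either test, and real operations rarely scale this cleanly.

```python
def cpu_time(rows, cpu_cost_per_row):
    """Modelled CPU time: proportional to the number of rows."""
    return rows * cpu_cost_per_row


def gpu_time(rows, fixed_overhead, gpu_cost_per_row):
    """Modelled GPU time: fixed overhead plus a smaller per-row cost."""
    return fixed_overhead + rows * gpu_cost_per_row


def break_even_rows(fixed_overhead, cpu_cost_per_row, gpu_cost_per_row):
    """Row count at which the modelled GPU and CPU times are equal."""
    if cpu_cost_per_row <= gpu_cost_per_row:
        raise ValueError("the GPU never catches up in this model")
    return fixed_overhead / (cpu_cost_per_row - gpu_cost_per_row)


# Hypothetical costs in seconds, chosen only to illustrate the shape.
OVERHEAD_S = 0.05
CPU_S_PER_ROW = 1e-7
GPU_S_PER_ROW = 1e-9

for rows in (100_000, 5_000_000, 100_000_000):
    speedup = cpu_time(rows, CPU_S_PER_ROW) / gpu_time(rows, OVERHEAD_S, GPU_S_PER_ROW)
    print(f"{rows:>11,} rows: modelled speedup {speedup:.2f}x")

print(f"break-even near {break_even_rows(OVERHEAD_S, CPU_S_PER_ROW, GPU_S_PER_ROW):,.0f} rows")
```

Under these assumed costs the modelled speedup is below 1 for the smallest dataset and grows as the row count rises, which mirrors the direction of the difference between the 5-million-row and 50-million-row results.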

Operation Complexity:

Compute-Intensive Operations: Functions that are computationally heavy benefit more from GPU acceleration.

I/O Operations: Reading data may not always see speedups if disk I/O becomes the bottleneck.

Hardware Differences:

GPU Model: The RTX A6000 (NVIDIA's test) and the RTX 3080 Ti (our test) have different specifications, which can affect performance.

CPU Performance: CPU capabilities can also influence the relative speedup observed.

Understanding the Mixed Results

Slowdowns: When GPU time exceeds CPU time, the speedup factor (CPU time divided by GPU time) falls below 1, meaning the operation ran slower on the GPU.

Possible Causes:

Data Transfer Overhead: Moving data to and from the GPU can introduce latency.

Operation Overheads: Some functions may not be fully optimized for GPU execution in cuDF.

Practical Implications

When to Use cuDF:

Large Datasets: Ideal for datasets with tens or hundreds of millions of rows.

Compute-Intensive Tasks: Operations that are heavy on computation rather than I/O.

When to Stick with pandas:

Small Datasets: For smaller datasets, pandas may be sufficient and more efficient.

Simple Tasks: For quick, simple manipulations, the overhead of GPU acceleration may not be justified.


Disclaimer: The performance results presented are based on specific hardware and datasets. Actual performance may vary based on system configuration and data characteristics.
